
Letter from the Editor

Dear readers,

Thank you so much for the wonderful feedback about my newsletter this past month. It was my pleasure to write the inaugural edition for all of you, and I hope you can use it in your daily practice and when advising your business partners.

There is something magical about fall that gets me energized every year. Maybe it’s the crisp air during those perfect football Saturday tailgates or watching my kids head back to school with fresh notebooks and big plans. Perhaps it’s those college campus visits where you can feel the academic energy in the air, or simply the way autumn makes everything feel like a fresh start. Whatever it is, fall absolutely rocks for refocusing and getting back to business.

Just like students settling into new routines, regulators are hitting their stride this season too. They have clearly been busy over the summer, as you will see in the September edition of “In Case You Missed It.”

Key themes that are top of mind for me right now include:

- Enforcement actions focused on protecting children online.

- Cracking down on unfair and deceptive subscription practices, including making cancellation difficult and imposing junk fees.

- The continued collaboration between federal and state agencies on major enforcement initiatives.

- Encouraging clients to look closely at data security practices given some recent breaches affecting major platforms.

We are seeing an interesting dynamic where states continue to lead the charge on AI legislation while federal regulators take a more measured approach. Given these developments, I encourage you to take a fresh look at your business practices for compliance, particularly anything related to online sales and customer interactions. Think of it as your own back-to-school audit!

I have also sent a link to our data security interactive resource. Feel free to use it to discuss these issues with your organization.

I hope you enjoy this month’s newsletter, and more importantly, you get to enjoy some time outside and with your friends and family.

I am here if you want to bounce ideas off me or discuss potential issues before presenting them to your business clients.

Here is to a productive fall season ahead!

Susan

ARTIFICIAL INTELLIGENCE

Overview

- Federal Government Continues to Take a Deregulatory Approach and the States Continue to Regulate. The Federal Government, through its AI Action Plan, continues to prioritize rapid innovation through regulatory elimination, streamlined data center permitting, and expanded energy infrastructure. We are also seeing some federal efforts to address high-risk issues, including companion chatbots. States continue to introduce and enact legislation, with companion chatbots drawing particular attention in August.

- Enhanced Regulatory Scrutiny Demands Proactive Compliance. The FTC’s comprehensive investigation of seven major AI companies signals a fundamental shift toward preemptive oversight, while multistate enforcement actions targeting deepfakes and companion chatbots demonstrate that attorneys general (AGs) will aggressively use existing consumer protection laws to hold AI companies accountable and to require businesses to implement robust safety measures and transparent practices.

- Lawsuits and Settlements Provide Businesses with Critical Insights. The ruling that preceded Anthropic’s copyright settlement signals that while AI training on copyrighted material can constitute fair use, companies must acquire content through legitimate channels. The OpenAI chatbot lawsuit reinforces the need for businesses to prioritize responsible AI development and deployment to avoid liability exposure and tragic outcomes.

Federal AI Developments

AI Action Plan. The AI Action Plan, released in July 2025, outlines over 90 federal policy actions across three core pillars designed to achieve “global AI dominance”: (1) accelerating innovation through deregulation and removing ideological bias from AI systems; (2) building American AI infrastructure, including streamlining data center permitting and expanding energy generation; and (3) leading international diplomacy by exporting American AI technology to allies while strengthening export controls against adversaries like China. The plan represents a dramatic shift from the Biden administration’s safety-focused approach, instead prioritizing rapid development, removing regulatory barriers, and ensuring American workers benefit from AI advancement while preventing foreign competitors from accessing sensitive technologies. The White House plans to implement each action within six months to a year.

CHAT Act. Senator Jon Husted introduced the “Children Harmed by AI Technology (CHAT) Act of 2025.” If enacted, the bill would require age verification systems, parental consent for minors accessing AI chatbots, and parental notification if children discuss self-harm or suicide.

Senator Cruz’s AI Regulatory Sandboxing Initiative. Senate Commerce Chair Ted Cruz has introduced legislation to create regulatory sandboxes for AI companies and establish contained federal testing environments for AI software. To participate, companies would apply through the White House Office of Science and Technology Policy for regulatory waivers or modifications after demonstrating their products in controlled spaces. The sandboxing program would grant waivers for renewable two-year periods (maximum of 10 years in total), with the entire program sunsetting after 12 years. Regulatory sandboxes already operate in countries like Singapore, Brazil, and France. Cruz is working to secure Democratic sponsors for bipartisan support, building on existing momentum from a separate regulatory sandboxing bill for AI use in the financial sector.

FTC’s Comprehensive AI Chatbot Investigation. The Federal Trade Commission (FTC) launched a significant Section 6(b) inquiry on September 11, 2025. Section 6(b) gives the FTC authority to gather information from businesses to understand potential antitrust or consumer protection issues. The FTC issued 6(b) orders to seven major technology companies regarding their AI companion chatbot offerings: Alphabet, Character.AI, Instagram, Meta, OpenAI, Snap, and X.AI. The inquiry focuses on child safety measures and potential negative impacts on minors. Specifically, the FTC seeks information about the companies’ monetization models, technical operations, content moderation processes, safety testing, risk mitigation for children and teens, user communications and disclosures, compliance monitoring, and data practices. This regulatory action represents a fundamental shift in AI oversight philosophy, with the FTC taking a proactive approach and conducting studies to understand problems before they escalate rather than responding reactively. The action also signals that child safety is a primary driver of AI regulatory change, possibly marking the end of an era of unregulated AI experimentation. Companies have been given 45 days to respond. The inquiry is likely to result in additional guidance from the FTC in early 2026 on the AI safeguards that the industry should implement.

State AI Developments

States continue to enact AI legislation, with all 50 states introducing various AI-related measures. This month’s key themes include facial recognition and surveillance technology, companion chatbots, and AI use in healthcare.

Colorado AI Act Delayed. Colorado lawmakers pushed the effective date of the Colorado AI Act to June 30, 2026. Lawmakers met in August for a special legislative session with the hopes of amending the Act. The efforts fell apart when lawmakers could not reach consensus, and the Colorado Chamber of Commerce withdrew support. Lawmakers plan to revisit the issue in January 2026.

Texas. Several Texas AI and technology laws took effect on September 1, 2025. The new laws include HB 150, establishing the Texas Cyber Command, and HB 3133 and SB 2373, which require social media platforms to implement complaint procedures for AI deepfakes and scams.

California. California adopted comprehensive employment regulations that take effect on October 1, 2025. The regulations address automated decision systems under the Fair Employment and Housing Act (FEHA), covering AI use in hiring, interviews, and job advertising, extending record retention requirements, and expanding employer liability for third-party AI vendors. During the session, lawmakers voted against several AI regulation bills, including measures to prevent AI-driven rental pricing and algorithmic price fixing and to require data center energy disclosure.

State AI Healthcare Regulation. Six states (Arizona, California, Maryland, Nebraska, North Dakota, and Texas) have passed comprehensive legislation regulating AI in healthcare. The laws prohibit using AI alone to deny medical necessity claims or prior authorizations without physician review, require AI-assisted decisions to avoid discrimination against particular patient groups, and mandate that decisions be based on individual rather than group data. Several states enacted specific restrictions on AI in mental health services: Illinois prohibits AI systems from providing therapy unless it is conducted by licensed professionals; Nevada restricts AI from representing itself as a mental health provider, with civil penalties of up to $15,000; and Utah requires mental health AI chatbots to disclose their artificial nature while prohibiting the sale of user health information to third parties. Over 250 bills targeting AI in healthcare have been proposed across state legislatures. Additional requirements include disclosure mandates, under which providers must inform patients when AI is used in healthcare services, and a Texas requirement that providers using AI for diagnostic purposes review all AI-generated information before entering it into patient records. Together, these measures demonstrate states’ proactive approach to managing AI’s expanding role in medical decision-making and patient care.

Multistate Responses

Swift State Response to Companion Chatbots. State concerns over companion chatbots have intensified since the Garcia lawsuit against Character.AI following a teenager’s suicide, with a similar case filed against OpenAI last month (see summary below). States have responded swiftly: New York enacted the first law requiring AI companion safeguards effective November 5, 2025, mandating crisis service referrals; Virginia Attorney General Jason Miyares joined a bipartisan coalition of 43 attorneys general demanding stronger minor protections; and California Senator Steve Padilla advanced Senate Bill 243, creating comprehensive safeguards and private rights of action for families. States are leveraging existing consumer protection and product liability laws while developing broader solutions. OpenAI has responded with ChatGPT changes, including parental controls for teens, model retraining, and connections to certified therapists.

Multi-State Enforcement Efforts Against Deepfake Technology. On August 26, a bipartisan coalition of 47 state attorneys general, co-led by Massachusetts AG Andrea Campbell, issued coordinated letters demanding that major technology companies intensify efforts to combat deepfake images. The coalition urged search engines to implement content restrictions similar to existing policies for harmful searches and asked payment platforms to deny services to sellers connected to deepfake nonconsensual intimate imagery (NCII) tools and to remove such sellers from their networks. The coordinated approach demonstrates that AGs are unwilling to wait for legislative solutions and will leverage state consumer protection and other existing laws to address AI-related harms.

AI Litigation

Anthropic Copyright Settlement. Anthropic, the company behind the Claude AI chatbot, is settling a major lawsuit with authors who alleged it illegally used their books to train its AI models without permission. Judge William Alsup held that using copyrighted materials to train AI models constitutes transformative fair use, reasoning that the AI used the books to learn patterns and techniques to create something new and different rather than simply copying them. Judge Alsup, however, rejected Anthropic’s argument that downloading over 7 million books from illegal pirate websites, instead of purchasing or licensing them, was acceptable for research purposes. Facing potential damages of up to $150,000 per book, Anthropic chose to settle the case rather than risk a jury trial. The case establishes an important principle: the method of acquisition matters just as much as what companies do with the content afterward. Under this ruling, AI companies can train their models on copyrighted works; however, they must obtain that material through legitimate means (e.g., buying books, licensing content, or using freely available content).

PRIVACY

- State laws continue to proliferate, and compliance is costly and complex. October 1 marks the effective date of the Maryland data privacy law, and Texas extended its mini-TCPA requirements to SMS. Non-compliance can result in costly resolutions with regulators and private litigants.

- Common business technologies now carry significant legal risk. Facial recognition systems can trigger BIPA claims, website tracking tools like Meta Pixel trigger wiretapping and ECPA claims, and data collection practices that were previously acceptable are generating massive class action settlements. Businesses should audit and consider modifying their technology stacks to ensure compliance with stricter data minimization and consent requirements.

- GPC Signals. Three states worked collectively to target multiple businesses that failed to honor Global Privacy Control opt-out requests. Businesses should audit their websites to ensure they detect and honor GPC signals, including for targeted advertising.

Privacy and AI regulation moved to the center of business strategy in 2025, with states rapidly enacting new laws, including Maryland’s new privacy law. Key themes this month include increased teen data regulation, enhanced geolocation and biometric data restrictions, simplified opt-out mechanisms, and broader health data obligations.

Maryland Online Data Privacy Act (MODPA). The Maryland Online Data Privacy Act (MODPA) is effective on October 1, 2025. MODPA expands the definition of biometric data to cover any data used to authenticate consumer identity, prohibits selling sensitive personal data without consent, and provides stricter protections for minors by banning targeted advertising to anyone under 18 if controllers know their age. The law imposes stricter data minimization requirements and only allows for personal data collection when necessary. The law also has lower compliance thresholds for businesses controlling or processing data or deriving revenue from data sales. MODPA features narrower exemptions than other state laws, including no blanket exemptions for HIPAA-covered entities or most nonprofits. Maryland’s AG has exclusive enforcement powers with potential 60-day cure periods, and unlike other state privacy laws, MODPA does not explicitly preclude private rights of action, potentially allowing consumer lawsuits under other legal frameworks.

Multi-State Privacy Enforcement – GPC. On September 9, 2025, California, Colorado, and Connecticut launched a coordinated enforcement sweep targeting businesses that fail to honor Global Privacy Control (GPC) opt-out requests, which are browser signals that automatically tell websites consumers want to opt out of personal data sales/sharing. This multi-state action represents a new trend in privacy enforcement, with regulators joining together to proactively investigate non-compliant businesses rather than waiting for complaints. This action is consistent with other recent actions, including a settlement with Honda. Businesses face penalties of up to $20,000 per violation in Colorado, $7,500 in California, and $5,000 in Connecticut, plus reputational damage and litigation risk. Companies should immediately audit their websites to ensure GPC signal detection and processing, update privacy policies, and review internal workflows for automated opt-out handling across all applicable jurisdictions to avoid regulatory scrutiny in this priority enforcement area.
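For teams auditing GPC handling, a minimal server-side sketch may help frame the technical check. It assumes the signal arrives as the `Sec-GPC: 1` HTTP request header described in the GPC specification (browsers also expose `navigator.globalPrivacyControl` to page scripts, which this sketch does not cover). The function names `gpc_opt_out` and `effective_sale_opt_out` are hypothetical, and a real implementation would also need to persist the opt-out and suppress downstream sales/sharing.

```python
from typing import Mapping


def gpc_opt_out(headers: Mapping[str, str]) -> bool:
    """Return True if the request carries a GPC opt-out signal (Sec-GPC: 1)."""
    for name, value in headers.items():
        # HTTP header names are case-insensitive, so compare lowercased names.
        if name.lower() == "sec-gpc":
            # The GPC specification uses the value "1" to signal an opt-out.
            return value.strip() == "1"
    return False


def effective_sale_opt_out(headers: Mapping[str, str], stored_opt_out: bool) -> bool:
    """Hypothetical helper: treat either a previously stored opt-out or a
    live GPC signal as an opt-out of data sales/sharing for this request."""
    return stored_opt_out or gpc_opt_out(headers)


if __name__ == "__main__":
    print(effective_sale_opt_out({"Sec-GPC": "1"}, stored_opt_out=False))        # True
    print(effective_sale_opt_out({"User-Agent": "test"}, stored_opt_out=False))  # False
```

The sketch treats a live signal as sufficient on its own and never lets its absence override a stored opt-out. How far the opt-out must extend beyond the current request (for example, to a known user's account) is a legal question that varies by jurisdiction.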

Oregon Consumer Privacy Act Report. Oregon released a first-year report on the Oregon Consumer Privacy Act (OCPA), showing 214 complaints received and 38 enforcement matters initiated. The majority of complaints involved online data brokers, with the most requested right being data deletion. The report noted positive industry response to cure notices, with most companies quickly updating privacy practices. OCPA’s nonprofit coverage started July 2025 and auto manufacturer requirements are effective this month.

Texas Senate Bill 140 (Mini-TCPA Amendment). Texas Senate Bill 140, effective September 1, 2025, expands the Texas Mini-TCPA by including SMS, MMS, and other text-based marketing communications in its definition of “telephone solicitation.” The law requires businesses making calls or sending texts to or from Texas to register, obtain a $10,000 surety bond, renew the registration annually, provide quarterly reporting, and adhere to specific calling hours. Failing to register may result in penalties of $5,000 per violation ($25,000 for willful violations), with each communication constituting a separate violation, plus potential Class A misdemeanor charges carrying up to one year in jail and a $4,000 fine. The law also creates a private right of action under the Texas Deceptive Trade Practices Act, allowing consumers to sue directly for damages (treble damages are available for intentional violations) and to sue the same company multiple times.

Children’s Online Privacy Protection Act (COPPA)

FTC Disney COPPA Settlement. Disney paid $10 million to settle allegations that it violated the COPPA Rule by failing to properly label YouTube videos as “Made for Kids” and enabling the unlawful collection of children’s personal data for targeted advertising. Disney set audience designations at the channel level rather than reviewing individual videos, despite YouTube’s 2019 requirements. The settlement requires Disney to establish a 10-year Audience Designation Program to ensure proper video classification and to remain fully compliant with COPPA.

Google’s YouTube Settlement. Google agreed to pay $30 million to settle claims, first filed in 2019, that its YouTube targeted advertising practices violated state laws by intruding on privacy and engaging in deceptive practices. The court found that Google’s targeting of children and collection of children’s data constituted a highly offensive privacy intrusion. The case followed Google’s earlier FTC settlement over COPPA violations and illustrates the trend of private class actions, brought under state consumer protection laws, following regulatory enforcement actions.

Biometric Privacy

GAO Facial Recognition Housing Report. A Government Accountability Office (GAO) audit found that facial recognition systems in rental housing lack adequate federal oversight, despite growing adoption by landlords and public housing agencies. While the technology provides security benefits by restricting unauthorized access, GAO identified risks, including discriminatory errors and unchecked surveillance. The audit criticized HUD for providing only vague guidance to housing agencies, many of which are uncertain about proper consent procedures, data retention, and accuracy requirements. The report may be useful for companies offering rental housing and using facial recognition technologies.

Home Depot Biometric Lawsuit. A Chicago resident filed a class action lawsuit against Home Depot, alleging that it used facial recognition technology at self-checkout kiosks without customer consent in violation of Illinois’ Biometric Information Privacy Act (BIPA), and seeking up to $5,000 in damages for each violation. The plaintiff, Jankowski, noticed facial recognition displays indicating data collection but found no warning signs in the store, highlighting BIPA’s requirement that businesses obtain written consent before collecting biometric identifiers. The case builds on the precedent set in the 2019 Rosenbach v. Six Flags decision, which established that individuals can sue for unlawful biometric data collection even without proving actual harm. It also reflects growing consumer discomfort with unauthorized biometric data collection and the critical importance of transparency, because compromised biometric data, unlike a password, cannot be changed.

Johnson & Johnson Neutrogena Settlement. Johnson & Johnson’s former consumer subsidiary settled a class action over the Neutrogena Skin360 app, which allegedly collected facial scans without informed consent in violation of Illinois’ BIPA. The app used AI-driven facial analysis for personalized skincare assessments, but its data practices drew scrutiny under one of the strictest biometric privacy laws in the U.S. The settlement terms remain confidential, but the case highlights growing regulatory risks for beauty companies using AI-driven diagnostic tools.

Geolocation

T-Mobile Loses Appeal on Sprint’s Geolocation Practices. On August 15, 2025, the DC Circuit upheld the FCC’s $92 million penalty against Sprint and T-Mobile for mishandling customer geolocation data. The court ruled that location information qualifies as protected Customer Proprietary Network Information (CPNI) under the Communications Act, and that the carriers failed to implement adequate safeguards when selling this data to third-party aggregators. Despite learning that unauthorized parties, including bounty hunters, had accessed customers’ sensitive location information, the carriers continued their data-sharing practices without proper verification systems. The court rejected the carriers’ arguments that their conduct was lawful, finding instead that the FCC’s interpretation of the statute was reasonable and that the carriers had received fair notice of their obligations to protect this sensitive data. Although courts remain split after the Supreme Court’s Loper Bright decision, in this instance the DC Circuit did give deference to the agency.

Google Privacy Lawsuit Award. A federal jury ordered Google to pay $425 million in a class action lawsuit for misleading 98 million users about data collection through its “Web & App Activity” setting. Even after users disabled the setting, Google continued collecting data through Firebase, an app development platform that allowed it to monitor activity across 1.5 million apps. The case revealed Google’s dual data collection system, along with internal communications showing the company knew it was being “intentionally vague” about data practices to avoid alarming users.

Apitor Technology Settlement. Chinese toy manufacturer Apitor agreed to a $500,000 penalty (suspended based on its financial condition) to settle FTC and DOJ allegations that it violated COPPA by collecting geolocation data from children under 13. The company’s app incorporated third-party Software Development Kit (SDK) components that collected data without parental notification or consent. The settlement requires the deletion of improperly collected data and a comprehensive review of all third-party software components for COPPA compliance.

Adtech

Illinois AdTech ECPA Claims. On the same day in late August, the U.S. District Courts for the Central and Northern Districts of Illinois issued decisions on defendants’ motions to dismiss in two separate cases. Both cases allege that the defendants violated the Electronic Communications Privacy Act (ECPA) by using a Meta Pixel or similar tool to intercept communications without consent. Although the courts reached different conclusions, one denying the motion and the other granting it with leave to amend, the decisions signal a continued willingness to interpret ECPA in a plaintiff-friendly manner. These decisions distinguish Illinois courts from other jurisdictions that have been less receptive to such privacy claims, adding to the legal risks organizations face when using common web analytics tools. ECPA violations can be costly, given that the law provides for statutory damages of $10,000 per violation. This trend mirrors the cases alleging violations of state wiretapping laws. Businesses should continue to audit and adjust their use of website advertising technologies, provide disclosures and obtain consent before using these technologies, and update their terms of use and data privacy policies.

Cybersecurity

Sixth Circuit Upholds FCC Data Breach Order. In its August 13, 2025, 2-1 decision in Ohio Telecom Ass’n v. FCC, the U.S. Court of Appeals for the Sixth Circuit upheld data breach reporting requirements issued by the Federal Communications Commission in 2023. Over a vigorous dissent, the court took an expansive view of FCC authority under Section 201(b) of the Communications Act, holding that the plain language permits the FCC to regulate any broadly construed practice undertaken “in connection with [a] communications service,” including breach notifications. The decision also turned on a narrow reading of the Congressional Review Act (CRA), which Congress had invoked in 2017 to reject similar requirements from a 2016 FCC Order. The 2023 Data Breach Order applied to broadly defined customer “personally identifiable information” beyond statutorily defined categories, and this ruling could pave the way for aggressive FCC regulatory activity on data privacy and cybersecurity. Notably, now-Chairman Brendan Carr had dissented from the original order’s issuance, arguing that the FCC lacked authority and that the CRA barred it.

Florida AG Investigates Home Camera Company’s Chinese Links. The Florida AG is investigating Lorex, a Canadian home security camera company, questioning its ties to the Chinese government as part of a consumer protection investigation into possible foreign spying risks. The AG’s office wants to learn more about Lorex’s relationship with Dahua Technology, a video surveillance company headquartered in China, expressing concern that data might be shared with the Chinese military. The Florida AG is requesting documents related to Lorex’s corporate structure, ownership, third-party manufacturing and software contracts, marketing claims, FCC filings, and contracts with Florida retailers selling its products. The investigation follows Dahua’s 2023 sale of Lorex after the parent company was placed on the U.S. Entity List, and U.S. lawmakers have since linked Dahua to human rights abuses.

Massachusetts Data Security Settlement. The Massachusetts AG secured a $795,000 settlement with Peabody Properties for data security violations following five cybersecurity breaches between 2019 and 2021 affecting nearly 14,000 consumers. The property management company allegedly delayed required breach notifications and failed to maintain reasonable security practices. The settlement requires comprehensive cybersecurity enhancements, including multi-factor authentication, vulnerability management, and annual independent security assessments for three years.

Salesforce Data Breach Lawsuit. A class action lawsuit was filed against Salesforce following a May 2025 data breach that compromised the personal information of over 1.1 million Farmers Insurance customers. The complaint frames the incident as a “hub and spoke” breach, with Salesforce as the hub cloud provider and Farmers and other clients as the spokes. The complaint alleges that the breach exposed highly sensitive personal information, leaving impacted customers vulnerable to fraud. The breach allegedly occurred through compromised access tokens or through phishing by threat actors seeking to steal login credentials. Because Salesforce provides cloud services to hundreds of companies, the actual number of people affected could be much higher, with other reported victims including Google, Cisco, Adidas, and Louis Vuitton. The plaintiff alleges negligence, breach of contract, and violations of California’s Unfair Competition Law. This breach raises critical issues for businesses and their vendors. Businesses must still notify impacted customers of breaches caused by their vendors and defend the litigation that follows the notice. This reinforces the message that businesses should negotiate strong indemnification provisions in vendor contracts and either avoid limiting vendor liability for data breaches or ensure that liability carve-outs adequately cover potential exposure.

Miscellaneous

FTC Warning on EU/UK Digital Laws. FTC Chairman Andrew Ferguson warned major tech companies, including Google, Apple, Meta, and Microsoft, about maintaining U.S. consumer privacy protections despite pressure from foreign governments to weaken such protections. The warning specifically addressed concerns about the EU Digital Services Act, UK Online Safety Act, and UK Investigatory Powers Act. Ferguson emphasized that companies remain subject to FTC Act prohibitions against unfair and deceptive practices even when complying with foreign regulatory demands.

MARKETING AND CONSUMER PROTECTION

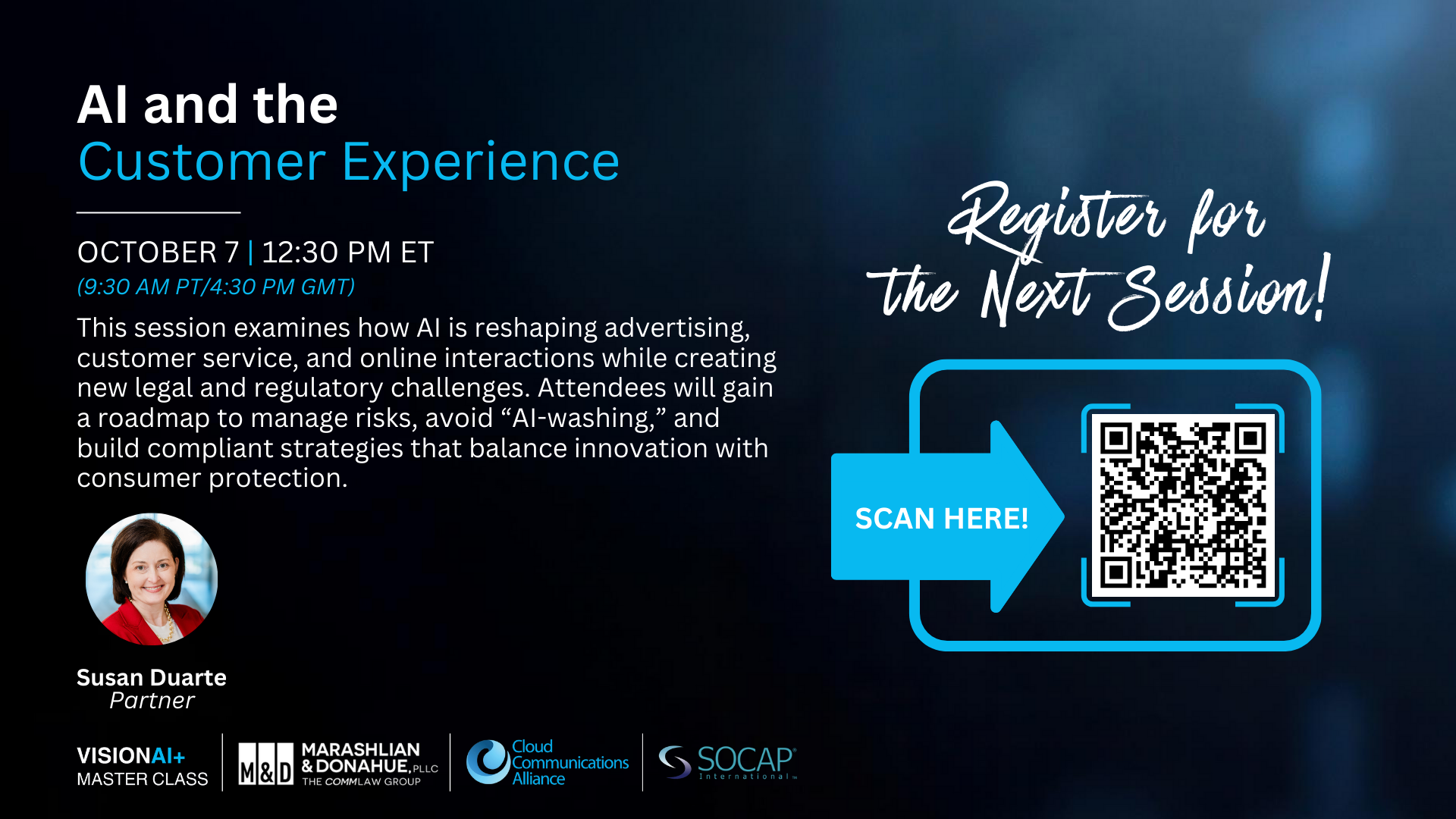

Learn more and register for my upcoming VisionAI+ Master Class on AI and the Customer Experience: How Businesses Should Rethink Advertising, Customer Service, and Online Interactions in the Age of Artificial Intelligence

The key themes this month for marketing and consumer protection include:

- Subscription Sign Up and Cancellation Practices. The FTC was laser-focused on enforcing its subscription rules this month, entering into settlements with Chegg, Match Group, and LA Fitness over obstructive cancellation practices and failures to disclose material terms prior to purchase. The FTC joined the Texas AG and private litigants to target drip pricing practices, where mandatory fees are only disclosed at checkout. Businesses should take a hard look at their purchase process and subscription cancellation procedures and make necessary adjustments to ensure transparency and mitigate risks.

- Autorenewal Laws. States continue to implement auto-renewal requirements or propose legislation. The laws are all unique, and businesses must take care to comply with federal and all state laws.

- FTC Enforcement. The FTC entered into seven settlements this month. Notably, relying on other laws, it continues to pursue conduct covered by rules that have been overturned (e.g., its Click-to-Cancel Rule) or that it has seemingly abandoned (e.g., the Noncompete Rule). This trend sends a strong signal to industry that the FTC remains active and will not hesitate to use all laws and remedies available to it, and that businesses should not de-prioritize compliance with its rules.

Subscription Practices

Massachusetts Autorenewal Law. Massachusetts auto-renewal regulations were effective on September 2, 2025. The rules require clear and unavoidable disclosures before purchase about recurring charges, the inclusion of specific calendar dates for free trial cancellations, advance renewal notices, and straightforward cancellation methods. These requirements exceed many current auto-renewal laws by mandating specific disclosure formatting and timing requirements, particularly for free trials, reflecting a growing national trend toward stricter consumer protection in subscription services. Violations of the law will be enforced under the Massachusetts Consumer Protection Law.

Michigan Auto-Renewal Legislation. Michigan House lawmakers introduced HB 4826, which would require clear disclosure of auto-renewal terms in consumer contracts lasting over a month. The bill would mandate 14-point type disclosures, detailed information about terms and pricing, renewal notices 30 to 60 days before cancellation deadlines, six-month subscription reminders, easy cancellation procedures, and no penalties for end-of-term cancellations. This legislation follows a growing trend of stricter state-level regulations on subscription practices.

Chegg Subscription Cancellation Settlement. The FTC reached a $7.5 million settlement with Chegg over allegations that Chegg made cancellation difficult through buried links and confusing processes. The FTC also alleged that Chegg kept billing nearly 200,000 consumers after they requested cancellation. The settlement requires Chegg to make cancellation as easy as sign-up, provide simple cancellation mechanisms, and issue consumer refunds. It signals that the FTC treats “simple cancellation” as an enforceable operational requirement rather than mere policy guidance.

Match Group to Pay $14M. Match Group entered into a $14M settlement with the FTC to resolve allegations of deceptive advertising, unfair billing practices, and obstructive cancellation procedures. The FTC’s 2019 complaint made three main allegations:

- Match offered a free six-month subscription to users who did not meet someone special, without disclosing the stringent requirements to qualify.
- Match complicated the cancellation process.
- Match suspended the accounts of customers who disputed charges.

The settlement requires clear disclosure of “Guarantee” terms, protection against retaliation, simplified cancellations, and compliance reporting.

LA Fitness Cancellation Policies. The FTC sued Fitness International (LA Fitness), alleging that it charged “hundreds of millions” of dollars in unwanted recurring fees because cancellation required in-person visits or mail-in forms. The company has 3.7 million members across more than 600 locations. It allegedly imposed complex procedures: members had to print forms, visit during limited in-person hours, and track down managers who could process cancellations. The company says it now offers online cancellation, but the FTC alleges these options remain inadequate. The FTC seeks a court order prohibiting the conduct, as well as customer refunds.

Junk Fees

Booking.com Junk Fees Settlement. Booking Holdings agreed to a $9.5 million settlement with Texas over junk fee practices. These included “drip pricing” that advertised low hotel rates and then added mandatory charges, such as resort and amenity fees, only at checkout. The settlement requires Booking to fully disclose all mandatory fees upfront. This is the largest state-level settlement Texas has reached with an online travel agency over deceptive pricing.

TaskRabbit Hidden Fees Lawsuit. A proposed class action lawsuit alleges that TaskRabbit misrepresents freelance service rates by hiding “Trust and Support” fees until checkout. The lawsuit claims these fees provide no additional value and vary inconsistently from one transaction to the next. It alleges they constitute deceptive “drip pricing” in violation of California law and federal guidance on misleading advertising. The plaintiff alleges being charged undisclosed fees of $28.88 and $10.76 on top of the advertised hourly rates for cleaning services.

Live Nation/Ticketmaster Federal Lawsuit. The FTC and seven state attorneys general sued Live Nation and Ticketmaster for “bait-and-switch” pricing practices. They allege the companies displayed low list prices and then added mandatory fees averaging 30% or more at checkout. The lawsuit also alleges the companies allowed ticket brokers to circumvent purchase limits by using thousands of accounts. The case demonstrates coordinated federal-state enforcement against drip pricing and highlights that publicly stated policies must match actual practices.

FTC Sues Ticket Broker Over Alleged Scheme. The FTC sued Key Investment Group and its executives for allegedly circumventing Ticketmaster’s safeguards against bulk ticket purchases. The operation reportedly used thousands of fake accounts, virtual credit cards, proxy IP addresses, and SIM boxes to mask identities, purchasing nearly 380,000 tickets over 14 months at a cost of $57 million and reselling them for approximately $64 million. For one Taylor Swift concert, they allegedly used 49 accounts to secure 273 tickets, far exceeding Ticketmaster’s six-ticket limit.

Air AI False and Deceptive Advertising. The FTC sued Air AI Technologies for allegedly deceiving small business owners with false promises about its “conversational AI” tools, causing some consumers to lose as much as $250,000. The company allegedly marketed business coaching materials and support services by promising that buyers could earn tens of thousands of dollars within days. It then allegedly rarely honored its money-back guarantees when customers requested refunds. The lawsuit alleges violations of the FTC Act.

First INFORM Act Enforcement Against Temu. Under a settlement with the FTC, Temu operator Whaleco, Inc. will pay a $2 million civil penalty. This resolves the agency’s first enforcement action under the INFORM Consumers Act. The FTC alleged that Temu failed to provide adequate mechanisms for consumers to report suspicious marketplace activity. It also alleged that Temu failed to provide required seller disclosures in its “gamified” shopping experiences. The settlement requires a user-friendly reporting system, clear seller disclosures, and ongoing compliance reporting for 10 years.

Noncompete Rule

FTC Abandons Noncompete Rule Appeal but Solicits Public Comment on Noncompete Clauses, Continues Its Enforcement Efforts, and Sends Warning Letters to Healthcare Providers. The FTC formally abandoned its appeals defending its nationwide ban on noncompete agreements after losing court challenges in Texas and Florida. The Commission voted 3-1 to dismiss the appeals, confirming that the sweeping rule approved in April 2024 will not take effect.

The FTC has nonetheless taken several other steps:

- It created a Joint Labor Task Force to coordinate enforcement strategies.
- It launched a public inquiry into noncompete clauses (responses must be filed by November 3, 2025).
- It entered into a settlement with Gateway Services Inc. that requires Gateway to release 1,800 employees from noncompete obligations.

FTC Chairman Andrew Ferguson also sent warning letters to healthcare employers and staffing firms, urging them to review their employment agreements for compliance with the antitrust laws. The letters target unreasonable noncompete agreements affecting healthcare professionals such as nurses and physicians, which can limit their employment options and patients’ choices. Although the FTC has withdrawn its nationwide ban, it remains committed to enforcing the antitrust laws against noncompetes under Section 5 of the FTC Act. It encourages all employers to ensure that restrictions on employee mobility comply with the law.

OF NOTE – Critical Need for Data Breach Mitigation & Response Planning

Click here to access the interactive Data Breach Guide for Legal Counsel

Not only was this month busy for regulators, but it was also busy for cybercriminals. From Salesforce, Farmers Insurance, Gucci, Jaguar, and Tiffany & Co. to PowerSchool, Air France-KLM, and TransUnion, cybercriminals made it clear that no industry is off-limits. As these incidents demonstrate, the question is not if but when your organization will face a data security incident. This month, I am sharing a visual, interactive data breach guide to help you shore up or create your data breach mitigation and response plans.

Why this is important for every organization:

- Financial impact. According to IBM’s Cost of a Data Breach Report, the global average cost of a data breach exceeds $4.4M. Legal expenses, notification costs, and regulatory fines make up significant portions of this figure.

- The regulatory landscape is increasingly complex. More than 50 data breach laws apply, along with the GDPR, the CCPA, and industry-specific requirements. Together, they create a complex web of post-breach notification obligations that must be navigated within extremely tight timeframes.

- Legal exposure continues to grow. Courts are increasingly recognizing data breach claims, and recent class actions have survived motions to dismiss on theories of negligence, breach of contract, and statutory violations.

- Incident response determines liability. Organizations with documented, tested response plans face significantly lower litigation risks and regulatory penalties than those responding ad hoc to incidents.

The interactive guide provides a framework for establishing proper protocols. You can review it with your IT security, privacy, and risk management teams to assess your current preparedness against the guide’s benchmarks. Without established mitigation and response protocols, your organization will be forced to make critical decisions under extreme pressure, with incomplete information, and against ticking regulatory clocks, some as short as 72 hours.